Matthew Lindfield Seager

Computers are fascinating!

TIL 'a'='a ' is true in standards compliant SQL.

Turns out, if the strings are different lengths, the shorter one is padded with spaces before they are compared.

Was super confusing at first when RIGHT(Comment, 1)='; ' was finding results!
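Here's a rough Ruby sketch of that padding rule, for contrast with Ruby's own == (which treats 'a' and 'a ' as different). The sql_style_equal? helper is a made-up name for illustration, not anything a database exposes, and it only models the padding, not collation:

```ruby
# Hypothetical helper: pads the shorter string with trailing spaces
# before comparing, roughly the way a padded SQL comparison behaves.
def sql_style_equal?(left, right)
  width = [left.length, right.length].max
  left.ljust(width) == right.ljust(width)
end

puts 'a' == 'a '                    # plain Ruby comparison
puts sql_style_equal?('a', 'a ')    # trailing space is ignored
puts sql_style_equal?(';', '; ')    # the RIGHT(Comment, 1) surprise
puts sql_style_equal?('a', ' a')    # leading spaces still matter
```

Only trailing spaces are forgiven, which is why a one-character string could match a two-character literal.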

Better teardown in Minitest (than using an ensure section - www.matt17r.com/2019/06/1…) can be achieved with (wait for it!) #teardown.

You learn something new every day, sometimes two things! 🙂
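A minimal sketch of what that looks like, assuming a plain Minitest::Test subclass rather than a Rails test case. The class name, test name and EVENTS constant are invented for the example, and the test is run by hand so the snippet doesn't depend on minitest/autorun:

```ruby
require 'minitest'

# Hypothetical test case that records the order in which things run.
class TeardownDemoTest < Minitest::Test
  EVENTS = []

  # Minitest calls this after each test, whether it passed or not.
  def teardown
    EVENTS << :teardown
  end

  def test_that_blows_up
    EVENTS << :test_started
    raise StandardError, 'boom'
  end
end

# Run the single test manually and inspect what happened.
result = TeardownDemoTest.new('test_that_blows_up').run

puts "passed? #{result.passed?}"
puts "events: #{TeardownDemoTest::EVENTS.inspect}"
```

The point is that teardown is recorded even though the test body raised, so the clean-up doesn't have to live in an ensure section inside every test.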

UPDATE

#teardown is probably a better way of achieving the desired outcome

MiniTest allows ensure blocks, even with Rails syntax 🎉

I already knew Ruby methods (and classes) could have ensure blocks:

    def some_method
      do_something_that_might_blow_up
    ensure
      tidy_up_regardless
    end

…and I knew MiniTest tests are just standard Ruby methods:

    def test_some_functionality
      assert true
    end

…but I wasn’t sure if the two worked together using Rails’ test macro that lets you write nicer test names:

    test "the truth" do
      assert true
    end

After spending too much time trying to find an answer I eventually realised I should just try it out and see:

    test 'the truth' do
      assert true
      raise StandardError
    ensure
      refute false
      puts 'Clean up!'
    end

And it worked! I see ‘Clean up!’ in the console and both assertions get called (in addition to the error being raised):

1 tests, 2 assertions, 0 failures, 1 errors, 0 skips

OpenStructs are SLOW, at least according to an article from a few years ago - palexander.posthaven.com/ruby-data…

I can’t vouch for how realistic the test is but running it again today as is shows that hashes have improved significantly. They are now faster than classes and ONE HUNDRED times faster than OpenStructs in this scenario.

                      user     system      total        real
    hash:         1.066739   0.005513   1.072252 (  1.098668)
    openstruct: 107.167921   0.261942 107.429863 (107.722112)
    struct:       2.185639   0.002021   2.187660 (  2.191069)
    class:        1.710577   0.001941   1.712518 (  1.715534)

Don’t prematurely optimise but if you’re doing anything that involves a lot of OpenStruct creation (which is where the overhead lies) it might be best to choose a different data structure.
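For anyone wanting to try something similar, here is a small sketch of a creation benchmark. It is not the linked article's code: PointStruct, PointClass, the two-field shape and the iteration count are all assumptions picked to keep it short, so the numbers won't match the table above.

```ruby
require 'benchmark'
require 'ostruct'

# Hypothetical stand-ins for the data structures being compared.
PointStruct = Struct.new(:x, :y)

class PointClass
  attr_reader :x, :y

  def initialize(x, y)
    @x = x
    @y = y
  end
end

ITERATIONS = 50_000 # kept small so the sketch finishes quickly

CANDIDATES = {
  'hash'       => -> { { x: 1, y: 2 } },
  'openstruct' => -> { OpenStruct.new(x: 1, y: 2) },
  'struct'     => -> { PointStruct.new(1, 2) },
  'class'      => -> { PointClass.new(1, 2) }
}.freeze

# Time how long it takes to create ITERATIONS objects of each kind.
TIMINGS = CANDIDATES.transform_values do |factory|
  Benchmark.realtime { ITERATIONS.times { factory.call } }
end

TIMINGS.each { |label, seconds| puts format('%-11s %.4fs', "#{label}:", seconds) }
```

It only measures object creation, which is where the overhead is said to lie. Reading and writing attributes afterwards would need its own measurement.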

On a related note, I’m struggling to figure out when passing around a loosely defined OpenStruct would ever be preferable to a class. Seems like an OpenStruct would unnecessarily couple the creator and the consumer.

More academically, I also re-ran the other benchmark, this time increasing the count to 100,000,000 and adding in a method that used positional arguments.

                     user     system      total        real
    keywords:   23.196255   0.054049  23.250304 ( 23.366176)
    positional: 19.664959   0.044322  19.709281 ( 19.789321)
    hash:       31.235545   0.039376  31.274921 ( 31.328692)

In this case I was gratified to see that keyword arguments are only slightly slower than positional ones. I much prefer keyword arguments as they are clearly defined in the method signature, raise helpful ArgumentErrors when you leave them out or type them wrong and are much easier to reason about when reading code where the method gets called.
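To illustrate the ArgumentError point, here is a small sketch. The create_user method and the argument_error_message helper are hypothetical names made up for the example, and the exact wording of the messages varies a little between Ruby versions, so the snippet just prints whatever Ruby reports:

```ruby
# Hypothetical method with two required keyword arguments.
def create_user(name:, email:)
  "#{name} <#{email}>"
end

# Returns the ArgumentError message raised by the block, or nil if none.
def argument_error_message
  yield
  nil
rescue ArgumentError => e
  e.message
end

# Leaving a required keyword out names the keyword that is missing.
missing = argument_error_message { create_user(name: 'Ada') }

# Passing a keyword the method doesn't define names the stray keyword.
unknown = argument_error_message do
  create_user(name: 'Ada', email: 'ada@example.com', admin: true)
end

puts missing
puts unknown
puts create_user(name: 'Ada', email: 'ada@example.com')
```

With positional arguments the equivalent mistakes would only produce a "wrong number of arguments" count, or silently put a value in the wrong slot.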

Postgres tip:

ALTER DEFAULT PRIVILEGES only applies the specified privileges to objects created in future by the role specified by FOR USER (or by the role that ran the ALTER statement if no user is specified).

Life tip:

Stack Overflow community is awesome! Great resource for sharing knowledge!

Automating Minitest in Rails 6

I’m building a new app in Rails 6 (6.0.0.rc1) and while I use RSpec at work I’ve been enjoying using Minitest in my hobby projects.

I’d love to know if there are ways to improve my setup but here’s how I set up my app to run Minitest tests automatically with Guard (https://github.com/guard/guard).

Set Up

- Install the necessary gems in the :development group in ‘Gemfile’ and run bundle. While we’re at it let’s give our minitest results a makeover with minitest-reporters:

      # Gemfile
      group :development do
        ...
        gem 'guard'
        gem 'guard-minitest'
      end

      group :test do
        ...
        gem 'minitest-reporters'
      end

- Finish the makeover by adding a few configuration tweaks into our test helper:

      # test/test_helper.rb
      require 'rails/test_help' # existing line...
      require 'minitest/reporters'

      Minitest::Reporters.use!(
        Minitest::Reporters::ProgressReporter.new(color: true),
        ENV,
        Minitest.backtrace_filter
      )

- Set up a Guardfile to watch certain files and folders. Originally I used bundle exec guard init minitest but I ended up deleting/rewriting most of the file:

      # Guardfile
      guard :minitest do
        # Run everything within 'test' if the test helper changes
        watch(%r{^test/test_helper\.rb$}) { 'test' }

        # Run everything within 'test/system' if ApplicationSystemTestCase changes
        watch(%r{^test/application_system_test_case\.rb$}) { 'test/system' }

        # Run the corresponding test anytime something within 'app' changes
        #   e.g. 'app/models/example.rb' => 'test/models/example_test.rb'
        watch(%r{^app/(.+)\.rb$}) { |m| "test/#{m[1]}_test.rb" }

        # Run a test any time it changes
        watch(%r{^test/.+_test\.rb$})

        # Run everything in or below 'test/controllers' everytime
        #   ApplicationController changes
        # watch(%r{^app/controllers/application_controller\.rb$}) do
        #   'test/controllers'
        # end

        # Run integration test every time a corresponding controller changes
        # watch(%r{^app/controllers/(.+)_controller\.rb$}) do |m|
        #   "test/integration/#{m[1]}_test.rb"
        # end

        # Run mailer tests when mailer views change
        # watch(%r{^app/views/(.+)_mailer/.+}) do |m|
        #   "test/mailers/#{m[1]}_mailer_test.rb"
        # end
      end

- Add relevant testing information to the README. I added the recommended approach (using bundle exec guard to automate the running of tests) but also included information on how to run tests individually or in groups.

- Commit all the changes to Git with a meaningful commit message (i.e. less what, more why)
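If the watch patterns look opaque, the ‘app’ watcher from the Guardfile can be tried out in plain Ruby without Guard installed. The regular expression and the string template are copied from the Guardfile; the APP_FILE constant, the test_path_for helper and the sample paths are made up for the demonstration:

```ruby
# Same pattern as the 'app' watcher in the Guardfile.
APP_FILE = %r{^app/(.+)\.rb$}

# Returns the test path for a changed file, or nil if the pattern ignores it.
def test_path_for(changed_file)
  match = APP_FILE.match(changed_file)
  match && "test/#{match[1]}_test.rb"
end

puts test_path_for('app/models/example.rb')
# prints test/models/example_test.rb
puts test_path_for('app/controllers/tags_controller.rb')
# prints test/controllers/tags_controller_test.rb
puts test_path_for('config/routes.rb').inspect
# prints nil (not under 'app', so this watcher ignores it)
```

Anything under app keeps its sub-path, so the convention only works if the test folders mirror the app folders.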

Caveats

- At the time of writing ‘guard-minitest’ (https://github.com/guard/guard-minitest) has not been updated for 18 months. It’s likely it’s complete and stable but it may not be getting the attention it deserves… even just a minor Rails 6 update to the template would be a good sign.

- I’ve commented out the controller and integration tests in my Guardfile because I don’t have any of those (yet?). I’m still finding my way but I like the idea of testing all the bits and pieces in isolation and then just having a smallish number of System tests that exercise the major functionality.

- The minitest-reporters gem and associated configuration are both optional. I just like a simple display with a splash of colour to easily see Red vs Green test states.

I dislike the “vote for your desired feature” approach to product development. It seems like a cop out to me (especially on smaller products).

Rather than doing the hard but necessary work of understanding (and anticipating!) customer needs the task gets farmed out to the crowd.

Enjoyed seeing Aladdin at the drive-in tonight! Weather held off, we had a lovely picnic dinner with friends, the movie was fun and our car started again 🙂

Only downside was lack of volume… wonder if it’s related to low transmission power (to keep signal hyper local)? 🤔

I deployed a new Rails app to Heroku tonight.

It’s so ridiculously easy, especially now I’ve done it once or twice and have everything installed.

I’m very ~tight~ fiscally conservative though, so I’ll have to wait and see if I’m still happy once I’m off the free dynos 🤑

Today I learned SQL Server’s default collation automatically equates similar characters to each other for sorting/searching. Normally that’s a good thing (e.g. so c and ç sort the same way) but today I wanted to find a specific Unicode character…

I needed to find (and replace) all “Narrow No-Break Space” characters (U+202F) but searching for LIKE '%' + NCHAR(8239) + '%' was also finding ordinary spaces.

The answer was to tell it to use a binary comparison when evaluating that field with COLLATE Latin1_General_BIN:

    SELECT *
    FROM Comments
    WHERE Comment COLLATE Latin1_General_BIN LIKE '%' + NCHAR(8239) + '%'

to find them and:

    UPDATE Comments
    SET Comment = REPLACE(
      Comment COLLATE Latin1_General_BIN,
      NCHAR(8239) COLLATE Latin1_General_BIN,
      ' '
    )

to replace them with regular spaces.

Priority Notifications is a new Teams feature. It allows a user to mark a chat message in Teams as “Urgent”. Urgent Messages notify users repeatedly for a period of 20 minutes or until messages are picked up and read by the recipient

Such a user hostile feature. Do not want!

Yesterday I posted about the one benefit of GraphQL I actually want… www.matt17r.com/2019/06/1…

This clip is the particular snippet of the conversation I was referring to

GraphQL vs(?) REST

I’ve been coming across a lot of GraphQL listenings/readings lately. Below is a small sampling and my very brief impressions.

The fourth link is the one that excites me most (spoiler, it’s not actually GraphQL).

- Recent episode(s) of The Bikeshed, particularly episode 198 (and even more particularly, 1 minute starting at 15:24): I really like the idea of GraphQL helping them to ask the right questions - questions about business processes rather than just data flow and endpoints

- Ruby on Rails 273 with Shawnee Gao from Square: Interesting use case but not really applicable in my situation

- Evil Martians tutorial/blog post: Wow! That’s only part 1 and it already looks like a lot of work!!! And of the three benefits they mention (not overfetching, being strongly typed and schema introspection) the last two seem to come with pluses and minuses of their own

- Remote Ruby with Lee Richmond from Bloomberg: Best of both worlds!?!? According to Lee, Graphiti offers GraphQL-like fetching but with a Rails-like RESTful approach that brings sensible, predictable conventions to API design.

Based on that last link I’m VERY keen to try out Graphiti (and friends):

- Why Graphiti?

- Intro video on YouTube

- Quickstart tutorial so you can take it for a spin yourself

I’m curious to know whether Graphiti can offer the benefit Chris Toomey attributed to GraphQL: encouraging the team to ask the right questions.

Fully Facebook Free (Finally)

I just deleted my WhatsApp account despite it being the best (only) way to get information from some groups.

Having previously deleted my Instagram account (and not having had a Facebook account for several years now) I think I’m finally 100% Facebook free.

I don’t trust Facebook with my personal data and it doesn’t get much more personal than my address book. Not only does it contain the names of everyone I know (or used to know), but for many of them it includes their birthdate, phone number(s), email address(es), home or work address and more.

For a while I tried to compromise by turning off access to my Contacts for WhatsApp but it wouldn’t let me start a new message thread with that off, not even by typing in the phone number manually.

I have no doubt that Facebook already has all that information and more (from other people’s address books), and I know they maintain shadow profiles on people who don’t have an active Facebook profile, but I refuse to willingly give them that information myself.

Keen to watch the RubyConf 2019 talks!

https://www.youtube.com/playlist?list=PLE7tQUdRKcyaOq3HlRm9h_Q_WhWKqm5xc

Just need to find a spare 40-50 hours 😆

I’ll be interested to see how “Sign In With Apple” develops.

One of the key complaints about Apple in schools is that they don’t have an identity management story. Every school uses G Suite, O365/AD, or both for identity.

Maybe one day SIWA will have an edu variant???

PSA: Having a Single Source of Truth is not about consolidating every bit of data in one enormous system.

Make sure “every data element is stored exactly once” (https://en.wikipedia.org/wiki/Single_source_of_truth) but each element should live in the system that makes most sense.

Great WWDC keynote today! Jam packed and well worth watching (especially compared to the “Services” event in March).

WatchOS updates were the standout for me! Activity trends, stand-alone audio and greater phone independence are all solid improvements.

Migrating from String to Citext in Postgres and Rails

I included a uniqueness constraint in my app to prevent what appears to be the same entry from appearing in a list multiple times and confusing people.

I later realised that I hadn’t taken PostgreSQL’s case sensitivity into account so multiple otherwise identical names were allowed, potentially confusing people.

    -- SELECT * FROM tags
    -----------
    name
    -----------
    john
    JOHN
    jOhN
    -----------
    (3 rows)

Rather than slinging data around in the model validations AND again in the database constraints I decided the best way to deal with it would be to convert the column from string to citext (case insensitive text) and let Postgres deal with it.

The first thing I tried was to migrate the column to citext:

    def change
      change_column :tags, :name, :citext
    end

The result was an error stating that Postgres doesn’t know about citext: PG::UndefinedObject: ERROR: type "citext" does not exist. That was easily fixed: I added enable_extension 'citext' to the migration and ran rails db:migrate again.

The next problem I encountered was when I tried to rollback. Whenever I write a migration I try to remember to test that it works backwards as well as forwards. In this case I received an ActiveRecord::IrreversibleMigration error due to the fact that change_column is not reversible (the current way it’s defined doesn’t let you specify what column type it’s being changed from so the rollback has no idea what to change it back to).

I could have used a reversible block for each direction but I find separate up and down methods to be clearer. In this case I renamed change to up and duplicated it (with two minor changes) for down:

    def up
      enable_extension 'citext'
      change_column :tags, :name, :citext
    end

    def down
      disable_extension 'citext'
      change_column :tags, :name, :string
    end

The rollback still failed though, this time with a mysterious error stating the column doesn’t exist:

PG::UndefinedColumn: ERROR: column "name" of relation "tags" does not exist

After double checking for typos, scratching my head a bit and then triple checking for spelling mistakes it finally dawned on me what I’d done. By disabling the citext extension too early I’d effectively dropped a cloak of invisibility over the citext column. Postgres could no longer see it.

Once I swapped the order of the method calls in the down method the migration worked in both directions and everything was hunky dory. Everything, that is, except for one little niggle…

I thought about future me and realised what could happen in six months’ time (or six days for that matter) once I’d forgotten all about this little disappearing column problem. Some day in the future I’ll probably copy this migration into another file, or maybe even another app.

The up migration will work, the down migration will work and I’ll be happy as larry… right up until I try to rollback even further. After this roll back the citext extension will be gone and rolling back the next migration that refers to a citext column will raise an undefined column error… in a completely different file!

The chances of all those things happening are vanishingly small but if it were to happen I can see myself spending hours pulling my hair out trying to figure it out! If fixing it were difficult I might decide to accept the (tiny) risk of it happening but in this case defending against it is as easy as splitting it out into two migrations and providing the reason why in my commit message:

    # migration 1.rb
    class EnableCitext < ActiveRecord::Migration[6.0]
      def change
        enable_extension 'citext'
      end
    end

    # migration 2.rb
    class ConvertTagNameToCaseInsensitive < ActiveRecord::Migration[6.0]
      def up
        change_column :tags, :name, :citext
      end

      def down
        change_column :tags, :name, :string
      end
    end

Now, if I ever copy and paste the contents of ConvertTagNameToCaseInsensitive into another migration it will either work perfectly (in both directions) or I’ll get a sensible error reminding me to enable the citext extension first.

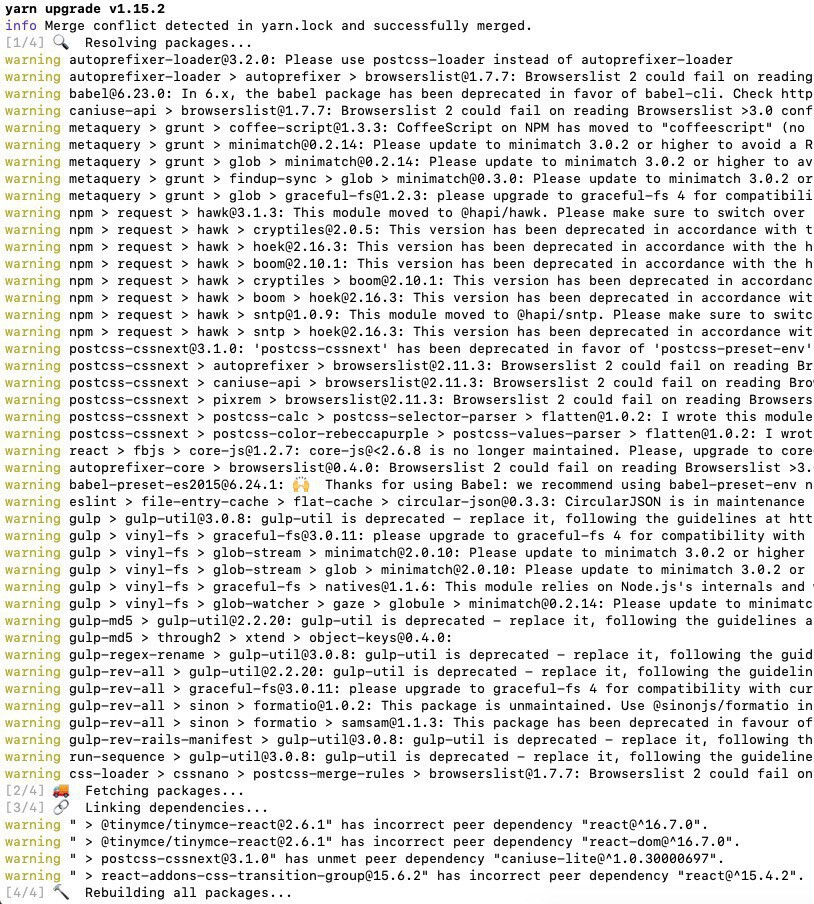

yarn upgrade:

- 47 lines of warnings (some only fit because I made my terminal 368 characters wide!)

- laptop fan forced into overdrive

- 23 minutes wasted trying to get yarn to work (yet again!)

I’m trying not to be a curmudgeon but is this really better than Sprockets?

Validating Data in Rails: Database Constraints, Model Validations or Client-side Checks?

I stumbled across an old StackOverflow question today about when to use model validations as opposed to database constraints in Rails apps. The question was originally about Rails 3.1 but whether you’re using Rails 3 or Rails 6 the correct answer is, of course, it depends.

Both database constraints and model validations exist for a reason. In addition to those two, I think it’s also worth considering where client-side checks fit in.

My short answer is:

- always write model validations

- in most cases back them up with database constraints

- sometimes add on client-side checks

As with most things, stick with these simple rules while you’re learning and you won’t go far wrong. Later on you can start to break them, once you know why you’re breaking them.

My longer (“it depends”) answer and my reasons for the rules above are below.

Model Validations

Model validations are your bread and butter as a Rails developer. They aren’t as close to the customer as client-side checks, requiring a round trip to the server to find out a form is invalid, but they are usually quick enough. They aren’t quite as robust as database constraints, but they are still quite powerful and they are much more user friendly.

Because they allow you to validate multiple requirements and present a consolidated error to the customer this is where you should start.

Their main downsides are robustness (they don’t necessarily enforce data integrity) and performance. To address the robustness concerns we layer on database constraints. If (and only if) you identify that model validations are detracting from the customer experience you can add client-side checks to improve the situation.

Database Constraints

Database constraints are lower level and much more robust. They aren’t very customer friendly when used on their own but should be added on top of (below?) model validations in most cases.

Whilst model validations are good (and not easily circumvented by customers), they are easy to circumvent by a developer which can lead to inconsistent data:

- A developer might accidentally circumvent them via a race condition or saving to the database from an alternate code path (or application)

- They can also be deliberately circumvented by setting attributes directly with update_attribute or update_column or by skipping validations entirely with .save(validate: false)

By contrast, database constraints are very difficult to accidentally circumvent by the customer or the developer (although a determined developer can deliberately circumvent them).

The sorts of data integrity issues that constraints can prevent include:

- non-unique IDs from multiple users of your Rails app (see ‘race condition’ link above)

- orphaned records when a parent record is deleted

- duplicate “has_one” records

- other invalid data from other apps or from developers skipping validation checks

Add database constraints to most model validations as an additional layer of protection. If there is a bug in your app, it’s better for your app to throw up an ugly error to the user straight away than for it to quietly save invalid data that eventually causes new bugs or leads to data corruption/loss.

The main potential downside to constraints is that you might need to make them database specific in which case you’ll lose the abstraction benefits of ActiveRecord::Migration.

Client-side Checks

Client-side checks (e.g. using JavaScript or HTML 5’s required attribute - which came out after the question was asked) are the closest to the customer and helpful for providing immediate feedback without any round trip to the server.

Add these on top of model validations when you can do so tastefully^ and you need to improve the customer [user] experience. This is particularly important for customers on low-bandwidth or high-latency connections (e.g. developing markets, remote locations, poor mobile reception or plane/hotel/ship wifi).

Keep in mind they are very easy to circumvent, unintentionally (JavaScript is disabled/blocked) or deliberately (by editing the source). They should never be relied upon for data integrity.

^ Also keep in mind that they are very easy to misuse/abuse. Make sure you avoid anti-patterns such as showing errors on fields that haven’t been filled in yet or constantly flashing an email field red/green (invalid/valid) on every keystroke.

Caveats

- There is some amount of repetition between the levels; some people might object to using more than one level on the grounds it isn’t DRY. I think this is one of those cases where a little bit of repetition increases application quality substantially

- On that note, make sure all 3 levels (if you use them) are consistent:

  - Your customers will be annoyed if the client-side check gives their password a big green tick but then an error comes back from the model validation saying it’s too short

  - It’s possible to have passing model tests even though the data can’t be saved to the database (if your constraints don’t match your validations and you’ve mocked out ActiveRecord)

- Raising a validation error on a hidden or calculated field that the customer can’t control is unhelpful at best and hugely frustrating at worst.

When Not to Use Model Validations

As alluded to above there are some situations where model validations should be omitted or removed and only a database constraint used. The main use case for this is when the validation is not helpful to (actionable by) the customer.

For example, imagine a registration form requires an email address and a password but the Person model requires email_address and hashed_password. If for some reason a bug causes hashed_password to be nil the form submission will fail with a model validation message saying “hashed password can not be blank”. This is confusing and unhelpful to the customer plus it potentially masks an actual bug in your code.

If you remove the model validation from hashed_password but keep the database constraint, the same attempt to register will cause an SQL error (which will then be trapped by your error reporting system). In this case it’s much clearer to the customer that there is a bug in the software (not just a problem with the password they’re registering) and they hopefully won’t retry the submission elventy bajillion times.

Tracking Rails Migrations

After removing some Rails migrations today I couldn’t rollback my database and I realised I had no idea how Rails keeps track of which migrations it has run.

I found out they live in a Rails managed table schema_migrations, in a single VARCHAR column version (containing the datetime of each migration as a 14 digit string, YYYYMMDDHHMMSS).

Knowing that isn’t particularly helpful because if you manually delete entries from that table you also need to manually revert the changes made in the corresponding migration. I do like to understand the technology behind the magic though.

If you find yourself in a similar situation what you probably want, and what I ended up doing after chasing this little tangent, is rails db:migrate:reset.
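As a small aside, a version value can be turned back into a readable timestamp in plain Ruby. This is only a sketch: the sample version is invented, and it assumes the value follows the UTC YYYYMMDDHHMMSS form that Rails’ generators put at the front of migration filenames.

```ruby
# Hypothetical version value, as it might appear in schema_migrations.
version = '20190601093000'

# Split the digits into year, month, day, hour, minute, second.
pattern = /\A(\d{4})(\d{2})(\d{2})(\d{2})(\d{2})(\d{2})\z/
parts = version.match(pattern).captures.map(&:to_i)

# Rebuild the moment the migration file was generated (assumed to be UTC).
created_at = Time.utc(*parts)

puts created_at.strftime('%Y-%m-%d %H:%M:%S UTC')
# prints 2019-06-01 09:30:00 UTC

# Going the other way reproduces the original version string.
puts created_at.strftime('%Y%m%d%H%M%S') == version
# prints true
```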

I’ve been enjoying listening to the Heroku podcast lately.

Dataclips seems like a very cool technology: devcenter.heroku.com/articles/…

Great for quick (shareable) data queries or you can even use it as a super simple JSON API!

Adding an 'in_ticks' method to Numeric in Ruby

Certain fields in Active Directory are stored in “ticks” (1 tick == 100 nanoseconds).

To make using ticks easier in an app I wanted to add an additional time method to Numeric (a lot like Active Support does… https://github.com/rails/rails/blob/master/activesupport/lib/active_support/core_ext/numeric/time.rb)

It’s probably not the best way of doing it but in the end I added it using an initialiser:

    # 'config/initializers/numeric.rb'
    # Be sure to restart your server when you modify this file.

    class Numeric
      # Returns the number of ticks (100 nanoseconds) equivalent to the seconds provided.
      # Used with the standard time durations.
      #
      #   2.in_ticks # => 20_000_000
      #   1.hour.in_ticks # => 36_000_000_000
      def in_ticks
        self * 10_000_000
      end
    end
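For going the other way, here is a sketch that works in plain Ruby without Active Support and without reopening Numeric. The TICKS_PER_SECOND constant and the ticks_to_seconds helper are hypothetical names for this example, not part of the initialiser above:

```ruby
TICKS_PER_SECOND = 10_000_000 # 1 tick == 100 nanoseconds

# Hypothetical inverse of in_ticks: converts a tick count back to seconds.
# Rational keeps the result exact for tick counts that aren't whole seconds.
def ticks_to_seconds(ticks)
  Rational(ticks, TICKS_PER_SECOND)
end

one_hour_in_ticks = 60 * 60 * TICKS_PER_SECOND

puts one_hour_in_ticks
# prints 36000000000
puts ticks_to_seconds(one_hour_in_ticks).to_i
# prints 3600
puts ticks_to_seconds(5_000_000).to_f
# prints 0.5
```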